They say that when gold is advertised in commercials, you know we've reached the peak of an economic cycle. Well, if there's an equivalent sign for technology overtaking our world, we've found it. Mark it: 2023 is the Year of AI-Everything.

We've found our gold-at-midnight moment for AI. At a recent children's puppet show, in the least tech-savvy theater possible, the plot was about farm animals being replaced by technology: the rooster by an alarm clock, the donkey by a tractor, the dog by a fence, and so on. Ouch. Or, should we say, A-I-A-I-uh-oh.

AI is here, no doubt. But will it stay, how will it stay, and what will the future look like? We're not sure, but we have contemplated its impact on the marketing industry. And lately, whenever we scroll social feeds or skim daily newsletters, we can't escape talk of ChatGPT and how it could change the way we work forever.


The jury's still out on whether ChatGPT, and AI generally, will solve all our problems or create more serious ones. Two years from now, will we be asking, "Remember when everyone was using ChatGPT for a few months? Whatever happened to it?" Or will we be wondering how we ever did our jobs without it? In other words, will it be transformative like Microsoft Excel or a flop like Google Reader? As of today, we're taking the middle ground on ChatGPT for marketers.

A recent LinkedIn post claimed that ChatGPT is like a free employee (disclaimer: if you know how to use it properly). After our eyes stopped rolling, we thought: what if ChatGPT were a colleague at Hencove, and how would we rate their performance during an annual review? So, without further ado, we're pleased to introduce our newest colleague, Rob Ott, a three-month-old chatbot.

While we wait for more proof of ChatGPT's staying power, here's how we'd rate Rob Ott's performance as a colleague, from 1 (poor) to 5 (excellent).

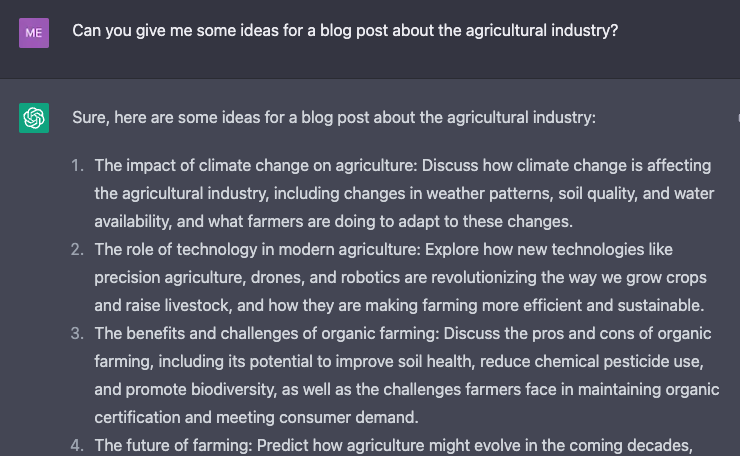

Brainstorming Ability | Rating: 3

Staring at a blank canvas or putting the first words on a page is lonely. Sometimes you need a creative jumpstart, and Rob appears to be pretty good at producing a bunch of ideas on an array of topics. For example, we asked Rob for blog post ideas about the agricultural industry. We were pleasantly surprised: they spat out 10 well-rounded topics, each with a basic outline. Once we choose a topic, the real work begins, but we see the value in Rob's ability to get the creative juices flowing.

On the other hand, we're skeptical of Rob's skills beyond the basics. We tasked Rob with brainstorming a new brand name for a robotics company. At first, the names looked pretty legit. But after a quick search, some of the names turned out to be too legit: they already belonged to existing organizations. You can leave the naming process to us, Rob.


Writing Ability | Rating: 2

To produce high-quality, long-form content, such as a whitepaper, eBook, or blog post, the author needs to understand the topic at hand. That understanding comes from direct experience or, at the very least, hours of research (like how we went to a puppet show to write this blog!). But despite the "intelligence" in the label, AI language models like Rob don't actually know anything. These tools are trained to recognize patterns within gigantic gaggles of words from the web, then piece those patterns together into a logical-sounding response.

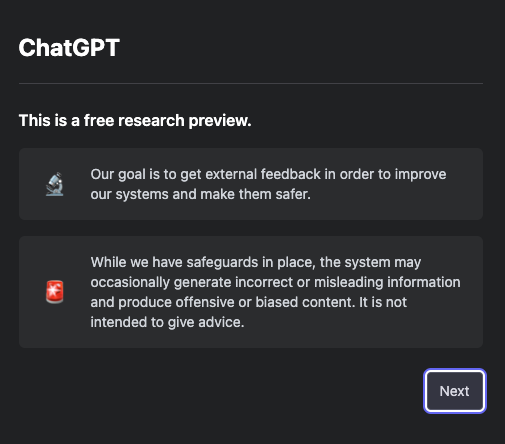

When you log into ChatGPT for the first time, it admits right off the bat that it may be wrong, offensive, or biased. Then it goes on to confess its limitations: it may occasionally generate incorrect information, it may produce harmful instructions, and it has limited knowledge of the world and events after 2021. A lot has changed since then in the primary industries we work in (healthcare, finance, and technology), so knowledge frozen at a fixed point in time could pose a significant challenge.

The internet already poses enough information risks; the last thing we need is AI tools spreading even more disinformation. Even one of ChatGPT's founders agrees it should be regulated. Another AI expert estimates that ChatGPT "hallucinates," or makes stuff up, 20% of the time. We'll have to ask Rob what they think of those odds.

We work with clients in industries where information accuracy is obligatory, not optional. And like us, Google takes pride in promoting information from reliable sources. Earlier this month, Google issued guidance to web publishers about AI-generated content, cautioning that it will be extra vigilant when elevating health, civic, or financial information in its search results. If we're researching healthcare content, we'd feel far more confident pulling up-to-date information and data from dependable sources and approved organizations, such as the CDC, than from ChatGPT.

Plus, whether we're writing for Hencove or a client, we live and breathe that brand's personality. Cough, cough, sorry Rob, but you weren't exactly the life of the recent team outing. While you can tell ChatGPT to "act" a certain way, we prefer humans writing for humans.

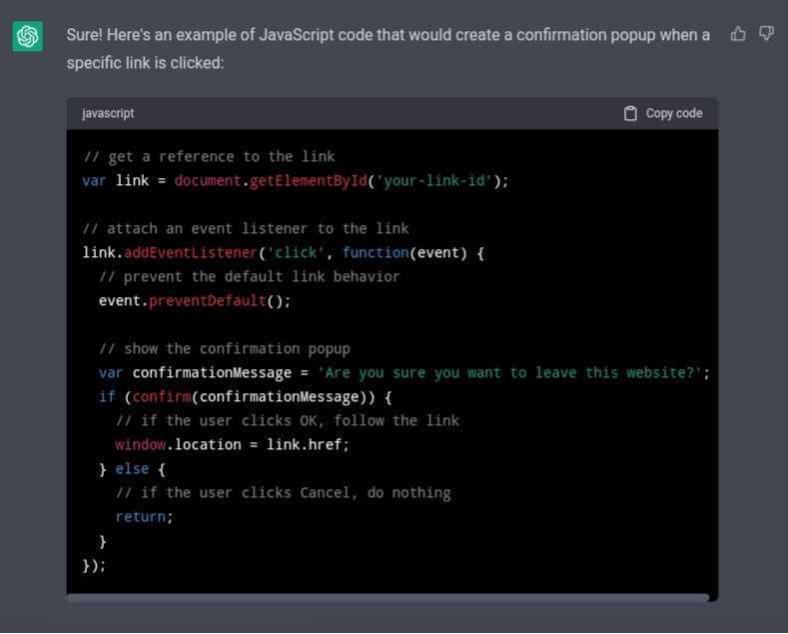

Development Ability | Rating: 3

Coding is where Rob's skills get more interesting. We asked Rob to write a small piece of code for a very specific task: create JavaScript that makes a pop-up appear, warning users that they are about to leave a website. We compared Rob's code to human-written code, and it was almost spot-on. After we provided a little more direction, Rob got the gist and adjusted its output into working code.
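For the curious, here is a minimal sketch of the kind of snippet we asked for. It is our own illustration, not Rob's actual output, and it assumes that "leaving the website" means clicking a link that points to a different origin. The function names `isExternalLink` and `installLeaveWarning` are hypothetical.

```javascript
// Hypothetical sketch (not Rob's output): warn visitors before they follow
// a link to another site. Assumption: "leaving" = clicking an http(s) link
// whose origin differs from the current page's origin.

/**
 * Returns true when `href` points to a different origin than `currentOrigin`.
 * Relative links resolve against the current origin, so they count as internal.
 */
function isExternalLink(href, currentOrigin) {
  try {
    const target = new URL(href, currentOrigin);
    // Only http(s) links count as leaving; mailto:, tel:, etc. are ignored.
    if (target.protocol !== "http:" && target.protocol !== "https:") {
      return false;
    }
    return target.origin !== new URL(currentOrigin).origin;
  } catch {
    // Malformed URLs are left alone rather than blocked.
    return false;
  }
}

/**
 * Attaches a single click listener to the document that asks for
 * confirmation before the browser follows an external link.
 */
function installLeaveWarning(message = "You are about to leave this website. Continue?") {
  document.addEventListener("click", (event) => {
    // Find the nearest enclosing link, if the click landed inside one.
    const link = event.target.closest("a[href]");
    if (!link) return;
    if (isExternalLink(link.getAttribute("href"), window.location.origin)) {
      // window.confirm shows a blocking OK/Cancel dialog; Cancel cancels navigation.
      if (!window.confirm(message)) {
        event.preventDefault();
      }
    }
  });
}

// Only wire up the listener when running in a browser.
if (typeof document !== "undefined") {
  installLeaveWarning();
}
```

Relative and `mailto:` links pass through silently; only links to another origin trigger the browser's built-in confirm dialog. Browsers also offer a `beforeunload` event, but modern browsers replace any custom message there with their own generic text, which is why this sketch intercepts link clicks instead.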

But Rob didn't spend their summer at coding camp. Instead, they learned from the code people have posted on sites like Stack Overflow and pieced together the correct answer from those patterns. To be fair, a web developer might take the same approach, albeit at a slower pace.

Undeniably, Rob excels at writing simple code that requires little to no creative thinking, debugging a massive dataset, or adding descriptive comments to code. These relatively minor, straightforward tasks can consume a developer's day, and this is where an AI language model could save us time. Even though Rob can technically build websites, the results are painfully basic. We lack confidence in Rob's ability to write complex code or design an impressive, user-friendly website.

Demonstrates Our Values | Average Rating: 2.8

As a values-driven organization, we're committed to doing the right thing, even when no one is watching. And we pride ourselves on being exceptional communicators. Because Rob is a machine, this is where things get dicey. As we would with any employee, we evaluated Rob's ability to demonstrate our five company values.

- Honesty | Rating: 3. If nothing else, Rob is open about their shortcomings. And while we believe Rob tries to be genuine, they still need a fact-check filter.

- Hard Work | Rating: 5. There's no doubt Rob is a hard worker; you can ask them endless questions and they always have an answer. And, overall, we're impressed with how efficiently Rob produces outputs.

- Humor | Rating: 2. Rob can be funny when we want them to be. They're not innately humorous or witty, but if asked, Rob can drop a pun with the best on our team. Then again, Rob lacks the discretion to avoid humor in the wrong situation. There's a time and a place for everything.

- Humility | Rating: 1. If we could give Rob a zero here, we would. They simply aren't capable of feeling emotions. And quite frankly, they're kind of a know-it-all.

- Curiosity | Rating: 3. Rob claims to be hungry to learn more and become better. But like a bad date with a one-sided conversation, Rob never asks us any questions, and that stings.

It all boils down to this: we don't fully trust Rob, or ChatGPT, yet. But people were unsure about Google in its early days, too. We should remember that ChatGPT is still in its infancy and probably isn't going away. We're curious about its future capabilities and open to learning how to make the most of its strengths. But we also have our authenticity radar on. If we can figure out how to work well with a chatbot like Rob, and use them to work smarter without damaging the quality of our work, we're all ears.

What are your thoughts about ChatGPT? We’d love to hear what you think.